Let's take a glimpse under the hood of this voicebot engine.

How do we maximize correct speech-to-text conversion for dialects?

Together with the University of Leuven, Orion Intelligence works on the NELF project (Next Level Flemish Speech Recognition), which aims to improve speech-to-text recognition for dialects in language areas with relatively few speakers, such as Flemish, which has about 6 million speakers.

We also use personalized, pre-trained sector models for question recognition.

Which techniques are being used?

Automatic Speech Recognition (ASR) converts spoken language into text. Orion Intelligence uses ASR systems to transform a voice input received by phone into a piece of text that can be analyzed further.
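Whether speech is converted "correctly" is commonly measured with the word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the ASR output into a reference transcript, divided by the number of words in the reference. The Python sketch below computes it with a word-level edit-distance table. It is a generic illustration of the metric, not Orion Intelligence's evaluation code, and the example sentences are made up.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum edits to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# Made-up example: the ASR output mishears one of six words
print(word_error_rate("i want to pay my invoice", "i want to pay my voice"))  # 1/6, about 0.167
```

A lower WER on dialect recordings means fewer words have to be corrected before the text can be analyzed.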

Classification involves identifying which category an input belongs to. In AI, it often refers to categorizing text, images, or other data. Intent detection is a type of classification used in Natural Language Processing (NLP) to determine the user's intention from their input.
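As a minimal illustration of intent detection as classification, the sketch below trains a multinomial naive Bayes classifier on bag-of-words features. This classic textbook technique is swapped in for illustration only. It is not Orion's pre-trained sector models, and the intent labels (`billing`, `delivery`) and training sentences are hypothetical.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesIntentClassifier:
    """Multinomial naive Bayes over bag-of-words features with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # intent -> word frequencies
        self.label_counts = Counter(labels)      # intent -> number of training examples
        self.vocab = set()
        for text, label in zip(texts, labels):
            words = text.lower().split()
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def predict(self, text):
        words = text.lower().split()
        total = sum(self.label_counts.values())
        best_label, best_score = None, float("-inf")
        for label, n_examples in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # log prior plus the smoothed log likelihood of every word
            score = math.log(n_examples / total)
            for w in words:
                score += math.log((counts[w] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical intents and training sentences
texts = [
    "i want to pay my invoice", "my bill is too high", "when is my invoice due",
    "where is my package", "my order has not arrived", "track my delivery",
]
labels = ["billing"] * 3 + ["delivery"] * 3
clf = NaiveBayesIntentClassifier().fit(texts, labels)
print(clf.predict("why is my invoice so high"))  # billing
print(clf.predict("where is my package"))        # delivery
```

Working in log space avoids numerical underflow when many small word probabilities are multiplied together.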

Dialect recognition refers to the ability of an AI system to identify and differentiate between the various dialects of a language. After that, the input follows the same process as Automatic Speech Recognition.
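Dialect recognition as described here works on audio. As a text-only analogue, the sketch below compares character trigram profiles using cosine similarity, a simple technique often used for language identification. The variant labels and the toy sentences, written in a colloquial and a standard Dutch register, are illustrative assumptions. They are far too few to serve as a real training set.

```python
import math
from collections import Counter


def char_ngrams(text, n=3):
    """Count overlapping character n-grams; spaces mark the start and end of the text."""
    padded = f" {text.lower()} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[g] for g, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_profiles(samples):
    """samples: dict mapping a variant label to a list of example sentences."""
    return {label: sum((char_ngrams(s) for s in sents), Counter()) for label, sents in samples.items()}


def identify(text, profiles):
    """Return the label whose n-gram profile is most similar to the text."""
    grams = char_ngrams(text)
    return max(profiles, key=lambda label: cosine(grams, profiles[label]))


# Toy sentences; labels and examples are illustrative only
profiles = build_profiles({
    "variant_a": ["ge zijt nie thuis", "da's nie waar", "hebt gij da gezien"],
    "variant_b": ["jij bent niet thuis", "dat is niet waar", "heb jij dat gezien"],
})
print(identify("ge zijt nie moe", profiles))    # variant_a
print(identify("jij bent niet moe", profiles))  # variant_b
```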

Entity extraction involves identifying and extracting specific pieces of information (entities) from text, such as names, dates, times, and locations. It is a crucial part of natural language understanding.
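The sketch below extracts a few entity types using hand-written regular expressions. This rule-based approach is an assumption chosen for brevity. Trained named-entity recognition models are the more common choice for names and locations, which regular expressions handle poorly. The patterns and entity labels are hypothetical, and the date pattern does not decide between day/month and month/day order.

```python
import re

# Hypothetical patterns for three entity types
PATTERNS = {
    "date": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),         # e.g. 12/03/2025 (day/month order ambiguous)
    "time": re.compile(r"\b(?:[01]?\d|2[0-3]):[0-5]\d\b"),     # 24-hour clock, e.g. 14:30
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def extract_entities(text):
    """Return (entity_type, value) pairs in the order they appear in the text."""
    found = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            found.append((match.start(), label, match.group()))
    return [(label, value) for _, label, value in sorted(found)]


print(extract_entities("Can I move my appointment on 12/03/2025 to 14:30? Mail jan@example.com"))
# [('date', '12/03/2025'), ('time', '14:30'), ('email', 'jan@example.com')]
```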

Retrieval-augmented generation (RAG) combines information retrieval with text generation: it retrieves relevant information from a dataset and uses it to generate a more accurate and contextually appropriate response.
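Here is a minimal sketch of the retrieval half of retrieval-augmented generation. Documents are ranked by bag-of-words cosine similarity to the question, and the best matches are inserted into a prompt. Production systems typically use dense embeddings and a vector index instead of word overlap. The final generation step, sending the prompt to a language model, is deliberately left out. The knowledge-base snippets are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"\w+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[t] for t, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question (retrieval step)."""
    q = Counter(tokenize(question))
    return sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(question, documents, k=2):
    """Augment the question with retrieved context; a generator model would answer from this prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {question}"


# Hypothetical knowledge-base snippets
docs = [
    "Invoices are sent by email on the first day of each month.",
    "You can track your parcel with the code in your confirmation email.",
    "Our contact centre is open on weekdays from 9:00 to 17:00.",
]
print(build_prompt("When are invoices sent?", docs, k=1))
```

Grounding the prompt in retrieved snippets is what lets the generated answer stay close to the organisation's own information.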

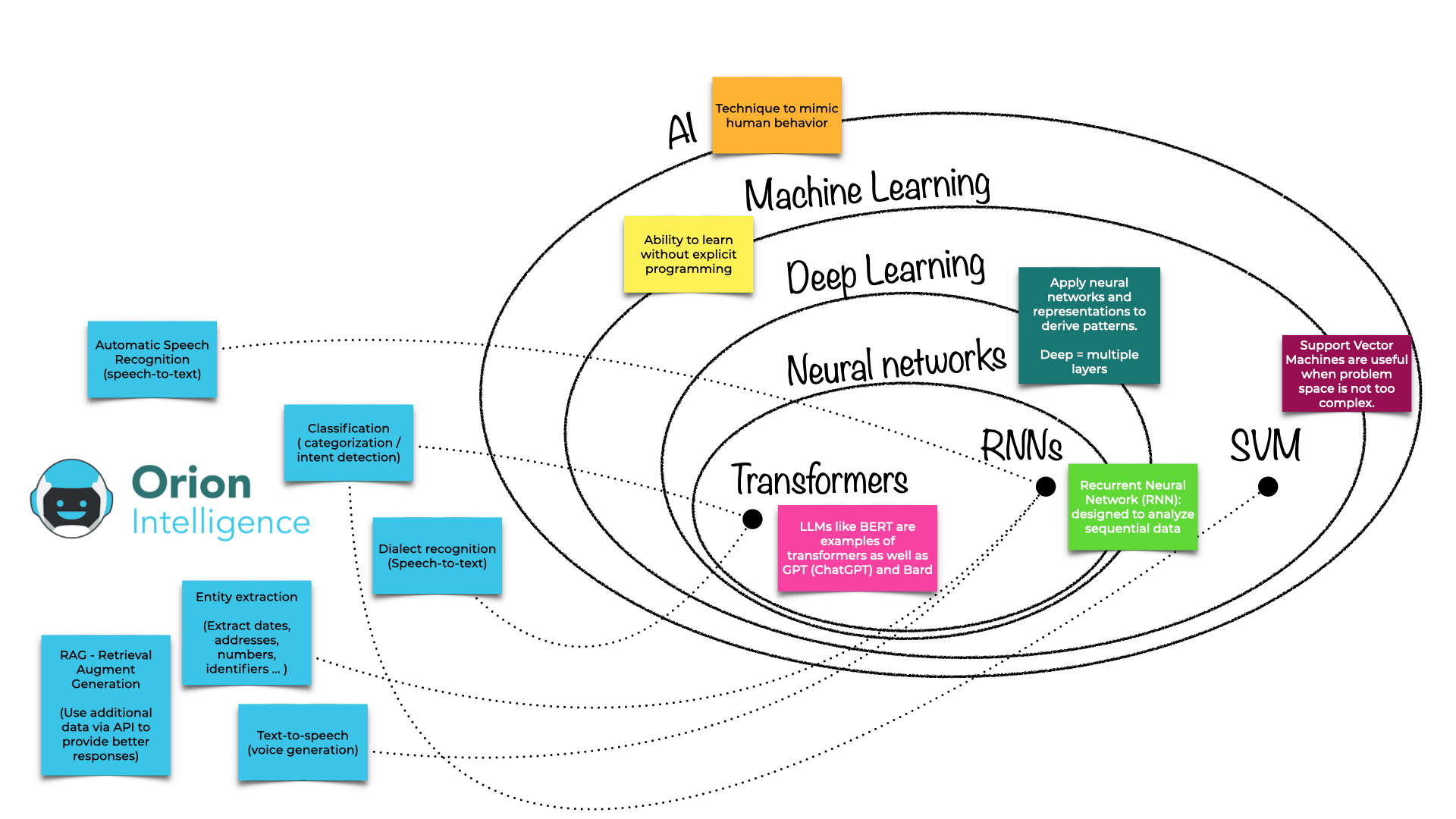

Machine learning is a subdomain of artificial intelligence in which systems learn and improve from experience without being explicitly programmed. The system identifies patterns and makes decisions based on data. The main learning paradigms are supervised, unsupervised, and reinforcement learning.

Neural networks are models loosely inspired by the human brain, and they form the basis of deep learning. They consist of layers of interconnected nodes (neurons) that process and transform input data to identify patterns and make predictions.
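To make "layers of interconnected nodes" concrete, here is a forward pass through a tiny network with two inputs, two hidden ReLU neurons, and one output. The weights are set by hand for illustration so that the network computes XOR, a pattern a single linear layer cannot represent. In practice, the weights are learned from data, for example with backpropagation.

```python
def relu(z):
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: each neuron takes a weighted sum of all inputs plus its bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


# Hand-picked (not learned) weights for a 2-2-1 network that computes XOR
HIDDEN_W, HIDDEN_B = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
OUTPUT_W, OUTPUT_B = [[1.0, -2.0]], [0.0]


def forward(x):
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)   # hidden layer with ReLU activation
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]   # linear output neuron


for a in (0, 1):
    for b in (0, 1):
        print(a, b, forward([a, b]))  # output is 1.0 exactly when a != b
```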

Transformers are a type of neural network architecture designed to handle sequential data, utilizing mechanisms like self-attention to capture relationships between different parts of the input data. Generative AI, often based on transformer models like GPT, is capable of creating new content such as text and images by learning patterns from large datasets.
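Self-attention can be sketched as scaled dot-product attention. Each token scores every token with a dot product divided by the square root of the vector dimension. These scores are turned into weights with a softmax, and the output is the weighted average of the token vectors. For brevity, this sketch assumes that the queries, keys, and values are the input vectors themselves. Real transformers compute them with learned linear projections and run several attention heads in parallel.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x.

    x is a list of token vectors of equal dimension d; the result has the same shape.
    """
    d = len(x[0])
    outputs = []
    for q in x:
        # score this token against every token, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        # weighted average of the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(v, 3) for v in row])
```

Because every output row mixes information from all tokens, each position can take the whole sequence into account.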

Large Language Models (LLMs) are advanced AI models trained on vast datasets to understand and generate human-like text. They use the transformer architecture to capture context and semantics, enabling tasks such as text completion, translation, and summarization. Well-known examples include GPT-3 and the language models behind Google's Bard. The earlier transformer model BERT is used mainly for understanding tasks such as classification rather than for generating text. These models have transformed natural language processing by producing highly coherent and contextually relevant output.

Natural Language Processing (NLP) is the subfield of AI focused on enabling machines to process and interact with human language. Natural Language Understanding (NLU) is the part of NLP concerned with comprehension. NLP encompasses a range of tasks, including text generation, translation, and sentiment analysis, while NLU aims to grasp the intent and meaning behind the text. Both rely on machine learning techniques that learn from large amounts of linguistic data, improving the accuracy and efficiency of language-based AI applications.

Support Vector Machines (SVMs) are supervised learning models for classification. They separate classes with the decision boundary that has the largest possible margin to the nearest training examples. In NLP, SVMs are applied to tasks such as text classification, sentiment analysis, and spam detection: text is first transformed into feature vectors, and the SVM then learns a boundary between the classes in that vector space.
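A linear soft-margin SVM can be sketched by minimizing the regularized hinge loss with subgradient descent. Libraries usually solve this problem with dedicated optimizers, for example LIBLINEAR or LIBSVM behind scikit-learn's `LinearSVC` and `SVC`. Treat this sketch as an illustration of the objective rather than a production trainer. The 2D points stand in for the feature vectors that text would be turned into. The data and the hyperparameters (`lam`, `lr`, `epochs`) are assumptions.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y_i * (w . x_i + b))) by subgradient descent.

    X: list of feature vectors; y: labels in {-1, +1}. Returns (w, b).
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regularizer
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # point is inside the margin or misclassified: hinge loss is active
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Toy, linearly separable 2D data standing in for text feature vectors
X = [[2, 2], [3, 3], [2, 3], [-2, -2], [-3, -1], [-1, -3]]
y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(X, y)
print(predict(w, b, [5, 5]), predict(w, b, [-5, -5]))  # 1 -1
```

Only points that fall inside the margin contribute to the hinge-loss gradient. This is why the final boundary depends on the examples closest to it, the support vectors.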

Every day brings new innovation in the AI domain. Since the open-source machine learning library TensorFlow was launched in 2015, new libraries, platforms, and techniques from a variety of research centers, universities, and organisations have been appearing every three months.

Our vision is to master the various state-of-the-art technologies available and bring a best-of-breed mix to the table. This allows us to stand on the shoulders of giants and deliver the best possible results for customer service.

Staying up to date on the latest developments and putting together the right mix of technologies and techniques is our core competency. What makes Orion what it is today is the combination of computer science, our experience in the customer service domain, and our ability to integrate.

Under the hood, we build AI models that can handle a variety of digital text channels.

For voice, our technology performs a speech-to-text conversion so that the resulting text can then be analyzed.

Web forms and contact forms are among the cleanest sources of customer questions.